>>> def http(e):
...     match e:
...         case 400:
...             print('Error 400')
...         case 401:
...             print('Auth request')
...         case _:
...             print('Unknown error')
...
>>> http(12)
Unknown error
>>> http(500)
Unknown error
>>> http(400)
Error 400


https://platform.openai.com/docs/guides/realtime-websocket?websocket-examples=python

>>> users
{'Hans': 'active', 'Éléonore': 'inactive', '景太郎': 'active'}
>>> [a for a in users.keys() if users.get(a)=='active']
['Hans', '景太郎']

>>> r
{'Name': 'Ambiorix', 'age': 34}
>>> {r[a]:a for a in r if type(r[a])==int}
{34: 'age'}
>>> {r[a]:a for a in r if type(r[a])==str}
{'Ambiorix': 'Name'}
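The same inversion can be written as a reusable function. The name `invert_by_type()` is hypothetical. Unpacking `.items()` avoids looking up `r[a]` twice, and `isinstance()` is the usual type check. Two caveats: `isinstance()` also accepts subclasses (so `True` counts as an `int`), and if two keys share a value, only the last key survives in the inverted dict.

```python
def invert_by_type(d, kind):
    """Return {value: key} for entries whose value is an instance of `kind`."""
    # isinstance() also accepts subclasses (e.g. bool is a subclass of int)
    return {value: key for key, value in d.items() if isinstance(value, kind)}


r = {'Name': 'Ambiorix', 'age': 34}
print(invert_by_type(r, int))  # {34: 'age'}
print(invert_by_type(r, str))  # {'Ambiorix': 'Name'}
```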


>>> answer
'Ambiorix Rodriguez Placencio'
>>> chr(max({a:ord(a) for a in answer}.values()))
'z'
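Going through `ord()` and `chr()` works, but it is not needed. `max()` over a string already compares characters by Unicode code point, so it returns the same character directly:

```python
answer = 'Ambiorix Rodriguez Placencio'

# max() compares the characters of a string by code point, just like comparing ord() values
print(max(answer))  # z

# Same result via ord()/chr(), without building an intermediate dict
print(chr(max(ord(ch) for ch in answer)))  # z
```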


Code that modifies a collection while iterating over that same collection can be tricky to get right. Instead, it is usually more straightforward to loop over a copy of the collection or to create a new collection:

# Create a sample collection
users = {'Hans': 'active', 'Éléonore': 'inactive', '景太郎': 'active'}

# Strategy: Iterate over a copy
for user, status in users.copy().items():
    if status == 'inactive':
        del users[user]

# Strategy: Create a new collection
active_users = {}
for user, status in users.items():
    if status == 'active':
        active_users[user] = status

import os
import sys

def search_key_value(file_path, search_key):
    parsed_dict = {}
    # Open and read the file safely using a context manager
    with open(file_path, 'r') as file:
        for line in file:
            # Expect "key,value" lines; skip blank or malformed lines instead of raising IndexError
            parts = line.strip().split(',')
            if len(parts) >= 2:
                parsed_dict[parts[0]] = parts[1]
    # Return the value associated with the search key if it exists
    return parsed_dict.get(search_key)

# Example usage:
file_path = '/var/www/html/fwlist.conf'
sound_folder = '/var/lib/asterisk/sounds/en/custom/'

# Exit with a usage message instead of an IndexError when no key is given
if len(sys.argv) < 2:
    sys.exit(f"Usage: {sys.argv[0]} <key>")
search_key = sys.argv[1]

value = search_key_value(file_path, search_key)

if value:
    print(f"The value for key '{search_key}' is: {value}")
    # Check if the corresponding file exists in the sound folder
    sound_path = os.path.join(sound_folder, value)
    print(1 if os.path.isfile(sound_path) else 0)
else:
    print(f"Key '{search_key}' not found.")
    print(0)
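The script above splits each line on commas by hand. Below is a sketch of the same parsing step built on the standard `csv` module. The helper `parse_key_value_lines()` and the sample rows are hypothetical. `csv.reader` returns an empty list for a blank line, so blank and one-field lines are skipped rather than raising `IndexError`. It also handles quoted fields such as `"a,b"`.

```python
import csv
import io


def parse_key_value_lines(lines):
    """Build {key: value} from 'key,value' rows; rows with fewer than two fields are skipped."""
    # csv.reader yields [] for a blank line and handles quoted fields like "a,b"
    return {row[0]: row[1] for row in csv.reader(lines) if len(row) >= 2}


# Hypothetical file contents standing in for fwlist.conf
sample = io.StringIO('welcome,welcome.wav\nclosed,closed.wav\n\nbroken-line\n"a,b",quoted.wav\n')
mapping = parse_key_value_lines(sample)
print(mapping.get('closed'))   # closed.wav
print(mapping.get('missing'))  # None
```

With a real file, pass the open file object, e.g. `with open(file_path, newline='') as f: mapping = parse_key_value_lines(f)`.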

>>> c
{'Ambiorix': 'Rodriguez', 'Katherine': 'Maria', 'Rosa': 'Perez'}
>>> [a for a in c if 'k' in a.lower()]
['Katherine']
>>> [a for a in c.values() if 'r' in a.lower()]
['Rodriguez', 'Maria', 'Perez']

https://github.com/AssemblyAI-Community/python-realtime-twilio-transcription/blob/main/main.py

try:
    n = 10
    if n < 20:
        raise ValueError("n must be at least 20")  # Raising a ValueError with a message
except ValueError as e:
    print("An error has occurred:", e)
finally:
    print("Code completed.")

--------

Custom exception example:

class CustomError(Exception):
    pass

try:
    raise CustomError("This is a custom error message.")
except CustomError as e:
    print("An error has occurred:", e)
finally:
    print("Code completed.")
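A custom exception can also carry extra data for the handler. Here is a minimal sketch; `StatusCodeError` and `check_status()` are hypothetical names, chosen so they don't clash with the `HTTPError` classes in `requests` or `urllib`:

```python
class StatusCodeError(Exception):
    """Hypothetical custom exception that carries an HTTP status code."""

    def __init__(self, status_code, message="Request failed"):
        # The string passed to Exception.__init__ is what str(e) returns
        super().__init__(f"{message} (status {status_code})")
        self.status_code = status_code


def check_status(status_code):
    """Raise StatusCodeError for 4xx/5xx codes, otherwise return 'OK'."""
    if status_code >= 400:
        raise StatusCodeError(status_code)
    return "OK"


try:
    check_status(404)
except StatusCodeError as e:
    print("An error has occurred:", e)    # An error has occurred: Request failed (status 404)
    print("Status code:", e.status_code)  # Status code: 404
finally:
    print("Code completed.")
```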

>>> words
['cat', 'window', 'defenestrate']
>>> {a:len(a) for a in words if len(a)>5}
{'window': 6, 'defenestrate': 12}

https://docs.python.org/3/tutorial/controlflow.html#if-statements

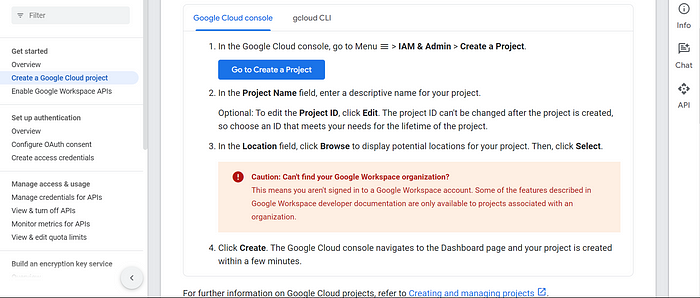

Go to the Google Cloud Console by clicking the link below :

The link will direct you to this page.

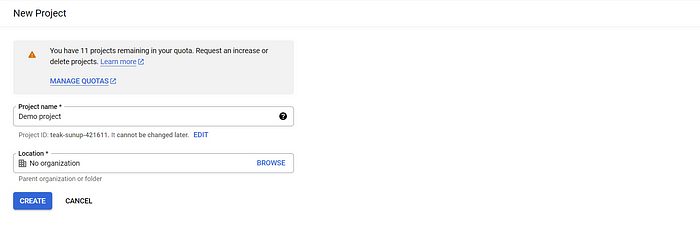

Create a new project by clicking the ‘Go to create a project’ button on the page above, or directly by using the link below. If you already have a project, you can select that one instead.

The link will direct you to this page. Enter a project name and create it.

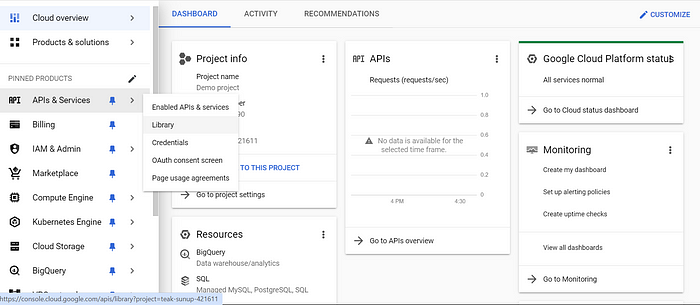

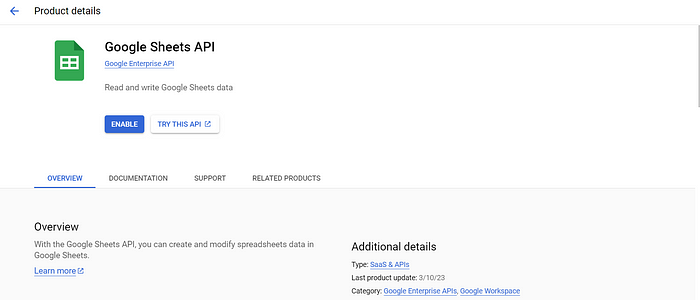

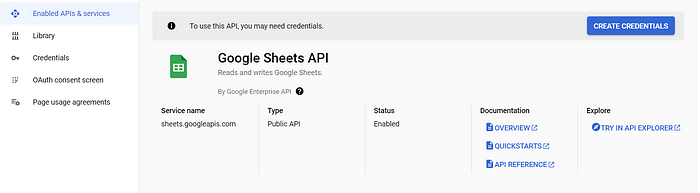

2. Enable the Google Sheets API

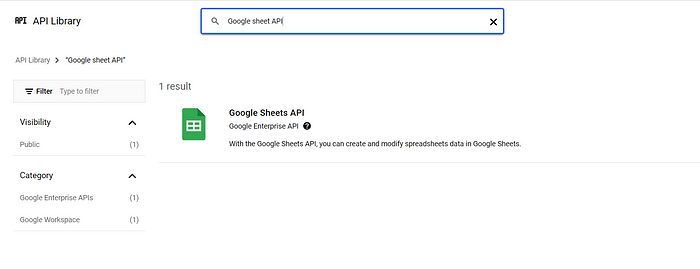

In the Cloud Console, navigate to the “APIs & Services” > “Library” section.

Search for “Google Sheets API” and enable it for your project.

Click on the ‘Enable’ button.

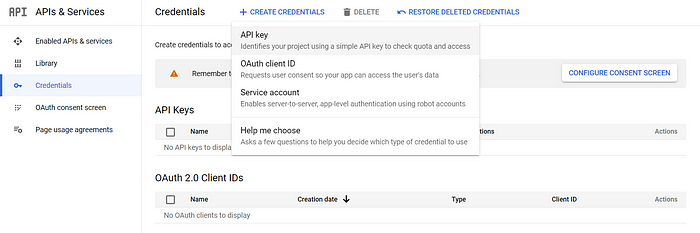

3. Create credentials

After enabling the API, go to the “APIs & Services” > “Credentials” section.

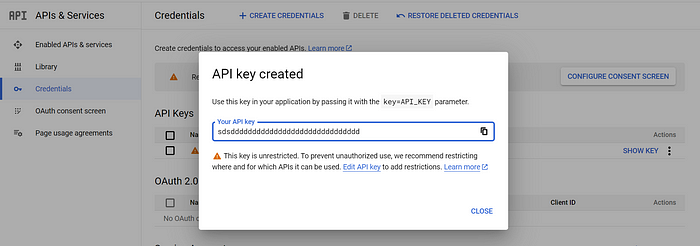

Click on the ‘Create credentials’ button and generate an API key.

Copy the API key for future use.

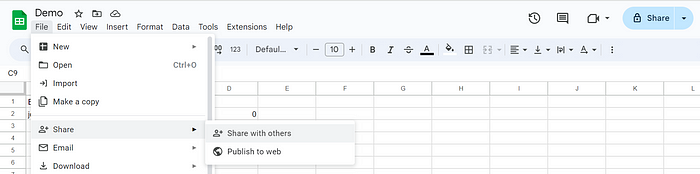

4. Manage Google Sheet Access Permissions

By default, Google Sheets are private. To make them accessible, you’ll need to share the Google Sheet publicly. Navigate to your Google Sheet and click on the ‘Share’ button.

Make sure you choose ‘Anyone with the link’ and set the access to ‘Viewer’.

5. Access Sheet Data

Below is the API endpoint to access sheet data. Please update the sheet ID, sheet name, and API key accordingly. You can find the sheet ID in the URL of your Google Sheet page. Look for the string of characters between ‘/d/’ and ‘/edit’ in the URL, and that is your sheet ID.

https://sheets.googleapis.com/v4/spreadsheets/{SHEET_ID}/values/{SHEET_NAME}!A1:Z?alt=json&key={API_KEY}

Next, open the URL in your web browser. You should receive a response similar to this:

{
  "range": "demo_sheet!A1:Z1000",
  "majorDimension": "ROWS",
  "values": [
    ["Email id", "Password", "count", "id"],
    ["demo@gmail.com", "demopass", "60", "0"]
  ]
}

6. Retrieve Sheet Data Using Python

Now, let’s write Python code to access this data.

import requests

def get_google_sheet_data(spreadsheet_id, sheet_name, api_key):
    # Construct the URL for the Google Sheets API
    url = f'https://sheets.googleapis.com/v4/spreadsheets/{spreadsheet_id}/values/{sheet_name}!A1:Z?alt=json&key={api_key}'
    try:
        # Make a GET request to retrieve data from the Google Sheets API
        # (the timeout keeps the call from hanging forever on a dead connection)
        response = requests.get(url, timeout=10)
        response.raise_for_status()  # Raise an exception for HTTP errors
        # Parse the JSON response
        return response.json()
    except requests.exceptions.RequestException as e:
        # Handle any errors that occur during the request
        print(f"An error occurred: {e}")
        return None

# Configuration: fill in your own spreadsheet ID and API key
spreadsheet_id = ''
api_key = ''
sheet_name = "Sheet1"

sheet_data = get_google_sheet_data(spreadsheet_id, sheet_name, api_key)
if sheet_data:
    print(sheet_data)
else:
    print("Failed to fetch data from Google Sheets API.")

https://medium.com/@techworldthink/accessing-google-sheet-data-with-python-a-practical-guide-using-the-google-sheets-api-dc57759d387a
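The `values` array in the response is a list of rows. The sketch below assumes the first row holds the column headers, as in the demo sheet response, and turns the remaining rows into one dict each. `rows_to_records()` is a hypothetical helper. The padding step is there because the Sheets API leaves out trailing empty cells, so a row can be shorter than the header row.

```python
def rows_to_records(values):
    """Turn a Sheets 'values' list (first row = header) into a list of dicts."""
    if not values:
        return []
    header, *rows = values
    # Pad short rows with '' so every record has every column
    return [dict(zip(header, row + [''] * (len(header) - len(row)))) for row in rows]


# Sample shaped like the demo response, plus a hypothetical short row
sample_response = {
    'range': 'demo_sheet!A1:Z1000',
    'majorDimension': 'ROWS',
    'values': [
        ['Email id', 'Password', 'count', 'id'],
        ['demo@gmail.com', 'demopass', '60', '0'],
        ['short@example.com'],
    ],
}

records = rows_to_records(sample_response.get('values', []))
for record in records:
    print(record)
```

With live data, pass `sheet_data.get('values', [])` from `get_google_sheet_data()` instead of the sample. Note that all cell values come back as strings (`'60'`, not `60`).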

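class calculadora:
    """Simple calculator for two numbers (suma = add, mult = multiply, div = divide, resta = subtract)."""

    def __init__(self, x, y):
        self.x = x
        self.y = y

    def suma(self):
        return self.x + self.y

    def mult(self):
        return self.x * self.y

    def div(self):
        # Raises ZeroDivisionError if y is 0
        return self.x / self.y

    def resta(self):
        return self.x - self.y

c1 = calculadora(24, 5)
print(c1.suma())   # 29
print(c1.mult())   # 120
print(c1.div())    # 4.8
print(c1.resta())  # 19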

Easy way to build a dict from two lists:

>>> d=dict(zip([1, 2, 3], ['sugar', 'spice', 'everything nice']))
>>> d
{1: 'sugar', 2: 'spice', 3: 'everything nice'}
>>> thedict={item[0]:item[1] for item in zip([1, 2, 3], ['sugar', 'spice', 'everything nice'])}

>>> words
['Ambiorix', 'RODRIGUEZ', 'Placencio', 42, 'AMIGo']
>>> [a for a in words if 'pl' in str(a).lower()]
['Placencio']
>>> [a for a in words if 'amb' in str(a).lower()]
['Ambiorix']
>>> [a for a in words if str(a).lower()=='amigo']
['AMIGo']

import requests

url = 'https://example.com'
# A timeout keeps the request from hanging indefinitely
response = requests.get(url, timeout=10)

if response.status_code == 200:
    print("Success!")
elif response.status_code == 404:
    print("Not Found!")
elif response.status_code == 500:
    print("Server Error!")
# Add more status code checks as needed
else:
    print(f"Received unexpected status code: {response.status_code}")
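Instead of writing one branch per code, the standard library's `http.HTTPStatus` enum already knows the standard reason phrases. `requests` also offers `response.ok` (True when the status is below 400) and `response.raise_for_status()`. The sketch below uses only `HTTPStatus`, so it runs without a network call. `describe_status()` is a hypothetical helper.

```python
from http import HTTPStatus


def describe_status(code):
    """Return 'code Reason-Phrase' for a known HTTP status, else a fallback message."""
    try:
        status = HTTPStatus(code)
    except ValueError:
        # Not a status code the standard library knows about
        return f"Received unexpected status code: {code}"
    return f"{status.value} {status.phrase}"


for code in (200, 404, 500, 799):
    print(describe_status(code))
```

With a live response, call `describe_status(response.status_code)`.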

import csv
import json

import requests
from bs4 import BeautifulSoup

url = 'https://example.com/data'  # Replace with actual URL
response = requests.get(url, timeout=10)

if response.status_code == 200:
    content_type = response.headers.get('Content-Type', '')

    if 'text/html' in content_type:
        soup = BeautifulSoup(response.text, 'html.parser')
        print("HTML content:")
        print(soup.prettify())
    elif 'application/json' in content_type:
        data = response.json()
        print("JSON data:")
        print(json.dumps(data, indent=2))
    elif 'application/xml' in content_type or 'text/xml' in content_type:
        # BeautifulSoup's 'xml' parser requires the lxml package (pip install lxml)
        soup = BeautifulSoup(response.content, 'xml')
        print("XML content:")
        print(soup.prettify())
    elif 'text/plain' in content_type:
        print("Plain text content:")
        print(response.text)
    elif 'text/csv' in content_type:
        print("CSV content:")
        reader = csv.reader(response.text.splitlines())
        for row in reader:
            print(row)
    else:
        print("Received unsupported content type:", content_type)
else:
    print(f"Failed to retrieve data. Status code: {response.status_code}")
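The substring checks treat the `Content-Type` header as free text. Two properties of media types make a stricter check reasonable: they are case-insensitive, and they may carry parameters such as `; charset=UTF-8`. A sketch of an alternative: strip the parameters, lowercase the result, then do an exact lookup. `media_type()`, `pick_handler()` and the `HANDLERS` labels are hypothetical. A real dispatcher may also want to handle `+json`/`+xml` suffix types such as `application/ld+json`.

```python
def media_type(content_type_header):
    """Return the bare, lowercased media type of a Content-Type header value."""
    # 'text/html; charset=UTF-8' -> 'text/html'
    return content_type_header.split(';', 1)[0].strip().lower()


# Hypothetical mapping from media type to a handler label
HANDLERS = {
    'text/html': 'html',
    'application/json': 'json',
    'application/xml': 'xml',
    'text/xml': 'xml',
    'text/plain': 'text',
    'text/csv': 'csv',
}


def pick_handler(content_type_header):
    """Look up the handler label for a header value; 'unsupported' if unknown."""
    return HANDLERS.get(media_type(content_type_header), 'unsupported')


print(pick_handler('Text/HTML; charset=UTF-8'))  # html
print(pick_handler('application/json'))          # json
print(pick_handler('image/png'))                 # unsupported
```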

import glob
import os
import subprocess

from prettytable import PrettyTable

def convert_size(size_bytes):
    """Convert bytes to a human-readable format (Bytes, KB, MB, GB, TB)."""
    if size_bytes == 0:
        return "0 Bytes"
    size_names = ["Bytes", "KB", "MB", "GB", "TB"]
    size = float(size_bytes)
    # Divide by 1024 until the value fits the unit (avoids float rounding in log()
    # and an IndexError for sizes beyond the last unit)
    for unit in size_names:
        if size < 1024 or unit == size_names[-1]:
            return f"{round(size, 2)} {unit}"
        size /= 1024

# Function to fetch and sort disk usage, then print a pretty table
def test(path, extension):
    table = PrettyTable(field_names=["File", "Disk Usage"])

    # Collect file sizes and names
    usage_info = []
    for file in glob.glob(os.path.join(path, f'*.{extension}')):
        # Pass arguments as a list so file names with spaces or quotes are safe;
        # GNU du -b prints "<bytes>\t<file>"
        usage = subprocess.run(['du', '-b', file], capture_output=True, text=True, check=True).stdout
        size = usage.split()[0]  # Keep the size, ignore the file name
        usage_info.append((file, int(size)))  # Store as a tuple of (file, size)

    # Sort by size (second element of the tuple), largest first
    usage_info.sort(key=lambda x: x[1], reverse=True)

    # Add sorted data to the table with human-readable sizes
    for file, size in usage_info:
        table.add_row([file, convert_size(size)])

    print(table)

# Get user input for directory path and file extension
path = input("Enter the directory path: ")
file_extension = input("Enter the file extension (without dot): ")

# Example usage
test(path, file_extension)
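`du -b` is a GNU coreutils option, and other `du` implementations (for example on BSD/macOS) may not support it. For a regular file, `os.path.getsize()` returns the same apparent size in bytes without starting a subprocess. Below is a sketch of the collection step done that way. `list_sizes()` is a hypothetical helper, and its output could feed the same `PrettyTable` loop.

```python
import glob
import os


def list_sizes(path, extension):
    """Return (file, size_in_bytes) pairs for path/*.extension, largest first."""
    pattern = os.path.join(path, f'*.{extension}')
    files = [f for f in glob.glob(pattern) if os.path.isfile(f)]
    # os.path.getsize() reports the apparent size, like `du -b` does for a regular file
    return sorted(((f, os.path.getsize(f)) for f in files), key=lambda item: item[1], reverse=True)


# Example: list .wav files in the current directory
for name, size in list_sizes('.', 'wav'):
    print(f"{size:>12,d}  {name}")
```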

pip install prettytable

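import glob
import subprocess

from prettytable import PrettyTable

# One-liner with pretty printing: one row per matching file with its `du -h` size.
# Building a tuple (rows, print(table)) makes print() run even when no files match;
# `[...] and print(table)` would skip printing for an empty list.
test = lambda a, b: (lambda table: ([table.add_row([file, subprocess.getoutput(f'du -h "{file}"').split()[0]]) for file in glob.glob(f'{a}*.{b}')], print(table)))(
    PrettyTable(field_names=["File", "Disk Usage"])
)

# Example usage
test('./', 'txt')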

>>> c=glob.glob('*.wav')
>>> c
['tts_1.wav', 'ss-claro.wav', 'en-US-Studio-O.wav', 'no-disp.wav', 'ss-viva.wav']
>>> [subprocess.getoutput(f'du -h {a}').split() for a in c]
[['168K', 'tts_1.wav'], ['768K', 'ss-claro.wav'], ['96K', 'en-US-Studio-O.wav'], ['352K', 'no-disp.wav'], ['508K', 'ss-viva.wav']]

du -h *.wav
96K en-US-Studio-O.wav
352K no-disp.wav

------------

>>> fs=lambda a:glob.glob(f'*.{a}')

Read the Excel file (pandas needs the openpyxl package for .xlsx files):

>>> import pandas as pd
>>> df = pd.read_excel('/home/ambiorixg12/Downloads/500.xlsx')

>>> f=open('/tmp/1.txt','r').readlines()
>>> [a.strip() for a in f if 'pe' in a.lower()]
>>> f=open('/tmp/1.txt','r')
>>> f.readlines()
['Ambiorix Rodriguez Placenio\n', 'Ruth Cedeno Lara\n', 'Katherine Montolio Perez\n', 'Armando Rodriguez Rosario\n']

do the magic

>>> f=open('/tmp/1.txt','r')
>>> [a.strip() for a in f.readlines()]
['Ambiorix Rodriguez Placenio', 'Ruth Cedeno Lara', 'Katherine Montolio Perez', 'Armando Rodriguez Rosario']
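The REPL lines above open the file without ever closing it. Below is a sketch of the same steps with a `with` block, which closes the file automatically, plus the case-insensitive `'pe'` filter. `read_names()` and `names_containing()` are hypothetical helpers. A temporary file stands in for `/tmp/1.txt` so the example runs anywhere.

```python
import os
import tempfile


def read_names(path):
    """Read one name per line, stripping newlines and skipping blank lines."""
    with open(path, 'r', encoding='utf-8') as f:
        return [line.strip() for line in f if line.strip()]


def names_containing(names, fragment):
    """Case-insensitive substring filter."""
    fragment = fragment.lower()
    return [name for name in names if fragment in name.lower()]


# Temporary file standing in for /tmp/1.txt
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False, encoding='utf-8') as tmp:
    tmp.write("Ambiorix Rodriguez Placenio\nRuth Cedeno Lara\n"
              "Katherine Montolio Perez\nArmando Rodriguez Rosario\n")
    tmp_path = tmp.name

try:
    names = read_names(tmp_path)
    print(names)
    print(names_containing(names, 'pe'))  # ['Katherine Montolio Perez']
    print([name.upper() for name in names])
finally:
    os.remove(tmp_path)
```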

>>> f=open('/tmp/1.txt','r')
>>> [a.strip().upper() for a in f.readlines()]
['AMBIORIX RODRIGUEZ PLACENIO', 'RUTH CEDENO LARA', 'KATHERINE MONTOLIO PEREZ', 'ARMANDO RODRIGUEZ ROSARIO